Streaming Video and Compression

Conceptually, streaming refers to the delivery of a steady “stream” of media content that’s been digitized for transmission over an IP network. Streamed content can be prerecorded or live, such as camera feeds. What complicates streaming is that most data networks were designed for general data traffic rather than for the continuous delivery of high-quality audio and video.

Bandwidth is a measure of the capacity of a network connection. Private internal networks may have ample bandwidth, but connections across the public internet are often constrained, although capacity continues to improve. Fast Ethernet (up to 100 Mbps) has largely been replaced by Gigabit Ethernet (up to 1 Gbps), and 10 Gigabit and 40 Gigabit links are not uncommon in Network Operations Centers. However, even relatively low-resolution SVGA video (800x600) can require nearly 1 Gbps when uncompressed, and uncompressed HD video needs several times that, so its data rates still exceed the capacity of most network connections. For this reason, streaming both high-resolution computer graphics and full-motion HD video over networks requires compression.
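The uncompressed data rate is simple arithmetic: pixels per frame times bits per pixel times frames per second. The sketch below assumes 60 frames per second and compares 24-bit color with 32 bits per pixel (common for padded framebuffers); these parameters are illustrative assumptions, not figures from the text. The 32-bit SVGA case lands at about 922 Mbps, which is consistent with the “nearly 1 Gbps” figure above.

```python
def uncompressed_bitrate_bps(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Raw data rate in bits per second: pixels per frame x bits per pixel x frames per second."""
    return width * height * bits_per_pixel * fps


# Assumed parameters for illustration: 60 frames per second, compared against a 1 Gbps link.
FPS = 60
LINK_BPS = 1_000_000_000

for name, (w, h), bpp in [
    ("SVGA 800x600, 24-bit", (800, 600), 24),
    ("SVGA 800x600, 32-bit", (800, 600), 32),
    ("Full HD 1920x1080, 24-bit", (1920, 1080), 24),
]:
    raw = uncompressed_bitrate_bps(w, h, bpp, FPS)
    print(f"{name}: {raw / 1e6:,.1f} Mbps ({raw / LINK_BPS:.0%} of a 1 Gbps link)")
```

This prints 691.2 Mbps (69%), 921.6 Mbps (92%), and 2,986.0 Mbps (299%) respectively, so uncompressed Full HD needs roughly three times the capacity of a Gigabit link before any protocol overhead is counted.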

Video compression uses coding techniques to reduce redundancy within individual frames and between successive frames. There are two basic techniques: spatial compression and temporal compression, and many compression algorithms employ both. Spatial (or intraframe) compression is applied to each individual frame of the video, reordering or removing information to reduce its size and compressing the pixel information as though the frame were a still image.
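To make “compressing each frame as though it were a still image” concrete, here is a toy intraframe coder. It uses run-length encoding, which collapses runs of identical pixels into (value, count) pairs; this is a deliberately simple stand-in chosen for illustration, not the transform coding that JPEG or MPEG actually use. The function names are hypothetical.

```python
from typing import List, Tuple

Frame = List[List[int]]  # a grayscale frame: rows of 8-bit pixel values
Runs = List[Tuple[int, int]]  # (pixel value, run length) pairs


def rle_encode_row(row: List[int]) -> Runs:
    """Collapse runs of identical neighboring pixels into (value, run_length) pairs."""
    runs: Runs = []
    for px in row:
        if runs and runs[-1][0] == px:
            runs[-1] = (px, runs[-1][1] + 1)
        else:
            runs.append((px, 1))
    return runs


def rle_decode_row(runs: Runs) -> List[int]:
    row: List[int] = []
    for value, count in runs:
        row.extend([value] * count)
    return row


def encode_frame(frame: Frame) -> List[Runs]:
    # Intraframe: the frame is compressed on its own, with no reference to other frames.
    return [rle_encode_row(row) for row in frame]


def decode_frame(encoded: List[Runs]) -> Frame:
    return [rle_decode_row(runs) for runs in encoded]


frame = [
    [0, 0, 0, 0, 255, 255, 255, 255],
    [0, 0, 0, 0, 255, 255, 255, 255],
    [10, 10, 10, 10, 10, 10, 10, 10],
]
encoded = encode_frame(frame)
print(encoded[0])  # [(0, 4), (255, 4)]
```

The 24 pixels shrink to 5 pairs because neighboring pixels repeat; a noisy frame with few repeats would barely compress, which is why practical codecs rely on more powerful transforms.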

Temporal (or interframe) compression, as the name suggests, operates across time. It compares each frame with an adjoining frame and, instead of saving all the information about every frame in the digital video file, saves only the differences between frames (frame differencing). This type of compression relies on periodic key frames, called “intra” or “I” frames. At each key frame the entire still image is saved, and these complete pictures serve as the reference frames for frame differencing. Temporal compression works best with video content that has little motion (for example, talking heads), because few pixels change from one frame to the next.
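A minimal sketch of frame differencing, assuming lossless, pixel-exact comparison (real codecs use motion compensation and lossy residual coding). Every `key_interval` frames a complete key frame is stored; every other frame stores only the pixels that changed since the previous frame. The names `encode`, `decode`, and `key_interval` are hypothetical.

```python
from typing import Dict, List, Tuple, Union

Frame = List[int]  # a flattened grayscale frame
Encoded = Tuple[str, Union[Frame, Dict[int, int]]]


def encode(frames: List[Frame], key_interval: int) -> List[Encoded]:
    encoded: List[Encoded] = []
    previous: Frame = []
    for i, frame in enumerate(frames):
        if i % key_interval == 0:
            encoded.append(("key", list(frame)))  # complete picture
        else:
            # Store only {pixel index: new value} for pixels that differ from the previous frame.
            diff = {j: v for j, (p, v) in enumerate(zip(previous, frame)) if p != v}
            encoded.append(("delta", diff))
        previous = frame
    return encoded


def decode(encoded: List[Encoded]) -> List[Frame]:
    frames: List[Frame] = []
    current: Frame = []
    for kind, payload in encoded:
        if kind == "key":
            current = list(payload)  # restart from a self-contained key frame
        else:
            current = list(current)
            for j, v in payload.items():
                current[j] = v
        frames.append(current)
    return frames


def stored_values(encoded: List[Encoded]) -> int:
    return sum(len(payload) for _, payload in encoded)


# A "talking head": a static scene in which only a pixel or two changes per frame.
base = [50] * 16
f1 = list(base)
f1[5] = 60
f2 = list(f1)
f2[6] = 60
f3 = list(f2)  # no change at all
frames = [base, f1, f2, f3]

enc = encode(frames, key_interval=4)
print(stored_values(enc), "values stored instead of", 16 * len(frames))  # 18 ... 64
```

With heavy motion almost every pixel would appear in each delta, and the savings would disappear, which matches the observation that temporal compression favors low-motion content.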

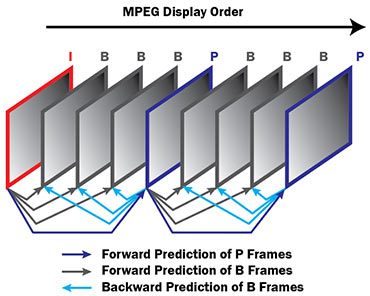

The figure above illustrates the scheme used in temporal compression. In addition to the “I” frames already described, it uses “P” (predictive) frames, which are predicted from a preceding I or P frame, and “B” (bi-predictive) frames, which draw on both the previous and the next frames. The use of “B” frames is optional.
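Because a B frame depends on the frame that follows it, the decoder must receive that later frame first, so transmission order differs from display order. The sketch below assumes a simplified MPEG-style model in which a P frame predicts only from the nearest preceding I or P frame and a B frame from the nearest I or P frame on each side; newer codecs such as H.264 allow more flexible references. The frame-type string and function names are hypothetical.

```python
from typing import List, Tuple


def references(pattern: str) -> List[Tuple[int, str, List[int]]]:
    """For each frame in display order, return (index, type, indices of frames it predicts from)."""
    anchors = [i for i, t in enumerate(pattern) if t in "IP"]  # I and P frames can be referenced
    result = []
    for i, t in enumerate(pattern):
        prev_anchor = max((a for a in anchors if a < i), default=None)
        next_anchor = min((a for a in anchors if a > i), default=None)
        if t == "I":
            refs: List[int] = []  # self-contained, no prediction
        elif t == "P" and prev_anchor is not None:
            refs = [prev_anchor]  # predicted from the previous I or P frame
        elif t == "B" and prev_anchor is not None and next_anchor is not None:
            refs = [prev_anchor, next_anchor]  # uses both the previous and the next anchor
        else:
            raise ValueError(f"frame {i} ({t!r}) has no valid reference in {pattern!r}")
        result.append((i, t, refs))
    return result


def decode_order(pattern: str) -> List[int]:
    """B frames wait until the anchor that follows them in display order has been decoded."""
    references(pattern)  # validate the pattern first
    order: List[int] = []
    waiting_b: List[int] = []
    for i, t in enumerate(pattern):
        if t == "B":
            waiting_b.append(i)
        else:
            order.append(i)
            order.extend(waiting_b)
            waiting_b.clear()
    return order


for i, t, refs in references("IBBPBBP"):
    print(i, t, refs)
print(decode_order("IBBPBBP"))  # [0, 3, 1, 2, 6, 4, 5]
```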

The Moving Picture Experts Group (MPEG) compression technique uses a Group of Pictures (GOP) to determine how many “I”, “P”, and “B” frames are used. A GOP size of one means that every compressed frame is an I frame. This results in lower latency but requires more bandwidth, because no interframe compression is applied.
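The GOP size and the number of consecutive B frames together fix the frame-type pattern. A minimal sketch, assuming a regular structure (one I frame, then P frames separated by runs of B frames); real encoders may vary the pattern adaptively, and `gop_pattern`, `gop_size`, and `b_frames` are hypothetical names.

```python
def gop_pattern(gop_size: int, b_frames: int) -> str:
    """Display-order frame types for one GOP: an I frame, then P frames separated by runs of B frames."""
    if gop_size < 1 or b_frames < 0:
        raise ValueError("gop_size must be >= 1 and b_frames must be >= 0")
    types = []
    for i in range(gop_size):
        if i == 0:
            types.append("I")  # every GOP starts with a self-contained key frame
        elif i % (b_frames + 1) == 0:
            types.append("P")  # anchor predicted from the previous I or P frame
        else:
            types.append("B")  # bi-predictive frame between two anchors
    return "".join(types)


print(gop_pattern(12, 2))  # IBBPBBPBBPBB
print(gop_pattern(6, 0))   # IPPPPP
print(gop_pattern(1, 2))   # I  (GOP size of one: every frame is an I frame)
```

In the 12-frame pattern, the two trailing B frames would reference the I frame that begins the next GOP. Each run of B frames also makes the encoder wait for the following anchor before it can code them, adding roughly `b_frames` frame intervals of delay; with a GOP size of one there is nothing to wait for, which is part of why that setting minimizes latency.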

For still-image compression, there are two widely used standards, both developed by the Joint Photographic Experts Group (JPEG). The original standard shares its name with the developing organization, JPEG, and uses a spatial compression algorithm based on the discrete cosine transform (DCT). A more recent standard, JPEG2000, uses a more efficient wavelet-based coding process. Both JPEG and JPEG2000 can also be used to code motion video by encoding each frame separately.
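The core of baseline JPEG’s spatial compression is an 8x8 DCT followed by quantization. The sketch below shows only those two steps, under stated simplifications: a single uniform quantizer step of 16 (an assumption, not one of JPEG’s quantization tables), a grayscale block, and no zigzag ordering or entropy coding. Applying the same per-block coding to every frame independently is the idea behind coding motion video with a still-image codec.

```python
import math
from typing import List

N = 8  # baseline JPEG transforms 8x8 blocks
Block = List[List[float]]


def _scale(k: int) -> float:
    """Normalization factor that makes the DCT orthonormal."""
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)


def _basis(pos: int, freq: int) -> float:
    return math.cos((2 * pos + 1) * freq * math.pi / (2 * N))


def dct2(block: Block) -> Block:
    """Forward 2-D DCT-II: pixel values -> frequency coefficients, indexed [u][v]."""
    return [[_scale(u) * _scale(v) * sum(block[x][y] * _basis(x, u) * _basis(y, v)
                                         for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]


def idct2(coeffs: Block) -> Block:
    """Inverse 2-D DCT: frequency coefficients -> pixel values, indexed [x][y]."""
    return [[sum(_scale(u) * _scale(v) * coeffs[u][v] * _basis(x, u) * _basis(y, v)
                 for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]


def quantize(coeffs: Block, step: float) -> List[List[int]]:
    # The lossy step: small high-frequency coefficients round to zero.
    return [[round(c / step) for c in row] for row in coeffs]


def dequantize(q: List[List[int]], step: float) -> Block:
    return [[c * step for c in row] for row in q]


# A smooth diagonal gradient, typical of low-detail image regions.
block = [[4 * x + 4 * y for y in range(N)] for x in range(N)]
STEP = 16  # assumed uniform quantizer step

q = quantize(dct2(block), STEP)
nonzero = sum(1 for row in q for c in row if c != 0)
restored = idct2(dequantize(q, STEP))
max_error = max(abs(restored[x][y] - block[x][y]) for x in range(N) for y in range(N))

print(nonzero, "of", N * N, "coefficients are nonzero")  # 3 of 64
print(f"largest pixel error: {max_error:.2f}")
```

Only three of the 64 quantized coefficients survive for this smooth block, and those few values are what an entropy coder would then pack efficiently; detailed, high-contrast blocks keep more coefficients and compress less.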